Gov't lid on deepfake sex?

First Amendment in prosecutor's crosshairs; Harris crowd shot looks fake

A move to censor deepfake sex is colliding with the First Amendment as the new AI imaging technologies trigger yet another battle for control of the internet.

San Francisco City Attorney David Chiu is asking a court to order the shutdown of websites that permit users to "nudify" people without their consent.

In Chiu's view, persons who profit from such technology are "bad actors" who must be censored in order to protect the "victims."

https://www.sfcityattorney.org/2024/08/15/city-attorney-sues-most-visited-websites-that-create-nonconsensual-deepfake-pornography/

https://www.sfcityattorney.org/wp-content/uploads/2024/08/Complaint_Final_Redacted.pdf

Chiu cites a California middle school incident in which some boys used such a website to create nude images with the heads of girl classmates spliced on.

"This disturbing phenomenon has impacted women and girls in California and beyond, causing immeasurable harm to everyone from Taylor Swift and Hollywood celebrities to high school and middle school students," says Chiu. "For example, in February 2024, AI-generated nonconsensual nude images of 16 eighth-grade students were circulated among students at a California middle school."

Nowhere in his statement or filing does Chiu address the issue of prior restraint of artistic expression, which is in effect what he seeks. Nor does he say how he intends to have the state distinguish between "real art" and non-art.

Further, he does not say HOW Taylor Swift and numerous other people have been harmed by deepfake images. Presumably, if they feel embarrassed, that would in his mind constitute harm. Yet anyone who feels victimized already has a remedy: a libel or defamation lawsuit.

Of course, there is likely only a short window of time during which juries will think deepfake targets have been truly victimized. That's because the spread of such technology means that sex images will everywhere be greeted with great skepticism as to their authenticity. They will be worth a few laughs and not much more.

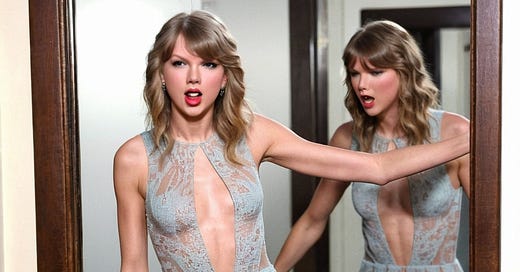

I went to a free AI imaging site, and with a little experimentation got past the NSFW (not safe for work) filter to obtain nudified Swift images. Below is one in which the singer's clothing is appropriately scanty, but not beyond current entertainment standards. The site says you must pay to make NSFW imagery. Yet OpenArt.ai, a major firm, is not among the companies Chiu wants muzzled.

From OpenArt.ai

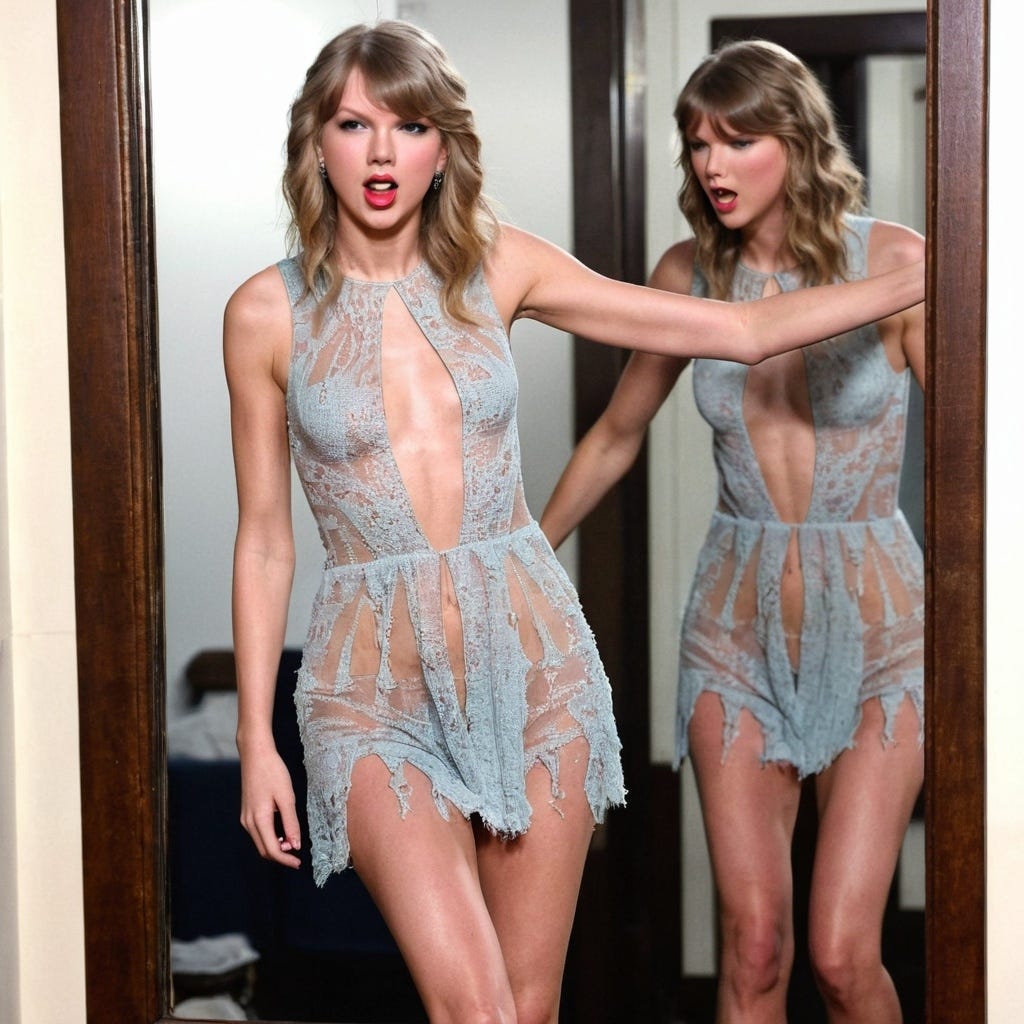

Even easier was Dezgo.com, an obliging site that doesn't restrict prompts or images. That site's imagery has improved greatly, and it was a snap to obtain Taylor nudies. Below is a non-NSFW example.

From Dezgo.com

Chiu has chosen a set of small fry, some with foreign connections, and avoided offending the big guns, like Google, whose search results surface all sorts of AI deepfake sex images, though those of Swift no longer appear. In any case, it is hard for me to believe that Swift has suffered terrible damage because people "misuse" her image. People were "misusing" images long before AI, and American society seems to have coped.

Now, I did not attempt to nudify a non-celebrity. But I am well aware that plenty of AI image-editing technology is available that makes seamless splicing of heads onto bodies a snap. Chiu quite obviously hasn't sued any AI editing firms, though he has redacted the names of the websites he did sue, probably to keep "bad actors" from testing the sites.

But without using Swift's name in the written prompt, I was able to use OpenArt's image-to-image generator to transform Swift from wearing a dress to wearing a bikini. In fact, I was able to nudify her, free of charge, without using her name. In other words, it is already a simple matter to sex up an ordinary person's image.

Legitimate publicity shot

Sexed-up AI shot based on image above

Partial image of fully disrobed AI woman

Legitimate image of Swift, who is known for working her sex magic to the hilt.

Chiu says he is trying to block, among other things, "child pornography." The trouble with that idea is that no child has been sexually abused in making these images. Hence, the idea must be that bad thoughts should be prevented lest they promote actual abuse. Though child porn laws were written to prohibit any depiction of child sex abuse, their purpose was to keep traffickers from arguing that a depicted person's youthful appearance was no proof of age, since the person might have been an adult.

Consider all the graphic murder news, fiction and entertainment that Americans consume. By the standard that such depictions might promote more murders, censors could shut down 90 percent of the press and entertainment business.

The important point here is that there are NO people in these machine images. Depicted are fantasies, clever illustrations. No person is actually harmed if you have "bad thoughts" when you look at AI porn. The church has a right to frown on AI porn. The state has no such right.

One more observation: Nearly all Americans detest the sexual abuse of children, though it happens with depressing frequency. Child porn traffickers have profited greatly from such abuse. But if the demand for such imagery were slaked with deepfakes, the financial pressure to sexually abuse real kids would plummet.

+++++++++++++++++++++++

Harris rally pic LOOKS like deepfake

After Donald Trump's camp scorned a Kamala Harris rally photo as an AI deepfake, her supporters rushed to rebut the claim, with Ars Technica going so far as to call Trump a liar.

https://arstechnica.com/ai/2024/08/the-many-many-signs-that-kamala-harris-rally-crowds-arent-ai-creations/2/

I ask the reader to take a careful look at the photo below. Does it look authentic? My first thought was: how did all those people get onto the tarmac without being stopped by federal transportation police?

Image copied from X site

But supposing that question can be answered, my next observation is that two fully visible signs are facing the wrong way. Well, maybe they were printed on both sides. But the signs also look wrong, as if spliced in: the lettering has no wrinkles, and the slight curvatures don't quite work.

Now, it's true that AI image generators ordinarily mangle signs. But that's hardly a meaningful defense of authenticity, since other AI tools are available for splicing images. The other defense is that there are no obvious distortions of limbs or other objects. Yet AI imaging has been improving at a rapid pace. I've made tons of AI images that betray no such distortions, along with a few that do.

Notice the sharp white lines on the edges of the arms and so forth. Those are typical of AI images, but much rarer in authentic images.

In my days in the newsroom, hundreds of crowd shots passed across my desk. I don't recall any that looked this unnatural.

Now it should be admitted that if the Harris crowd picture is AI-generated, it is darned good. But let's remember that the Democrats have tons of help from Silicon Valley aces.

A further point: Ars Technica claims there are "many, many signs" that the crowd shot is authentic, but the article doesn't tell us what most of them are.